Paul Sawers

Big tech companies are plowing money into AI startups, which could help them dodge antitrust concerns

Another week, and another round of crazy cash injections and valuations emerged from the AI realm.

Zen Educate raises $37M and acquires Aquinas Education as it tries to address the teacher shortage

Zen Educate, an online marketplace that connects schools with teachers, has raised $37 million in a Series B round of funding.

The raise comes amid a growing teacher shortage crisis on both sides of the pond, with a recent report from ADP Research Institute noting that the global pandemic exacerbated the existing supply/demand imbalance due to “stagnant wages and a stressful work environment.”

Founded out of London in 2017, Zen Educate replaces traditional third-party recruitment agencies that often use analog workflows and charge exorbitant fees. Zen Educate digitizes everything through a self-serve platform, removing pricey intermediaries from the equation in the process. Through the platform, teachers and schools create profiles, and Zen Educate automatically matches the two based on their compatibility, using data such as proximity, skills and experience, among other preferences.

Schools can use Zen Educate to hire for full-time roles, but teachers can also use it to more easily find temporary or part-time roles that fit around their lives.

“Like in all areas, educators are looking for greater flexibility in their work, and thus, there is a greater need for flexible working solutions in education like Zen Educate,” Zen Educate co-founder and CEO Slava Kremerman told TechCrunch.

On top of that, Zen Educate also promises higher pay, given that it takes a smaller cut than incumbent agencies.

“The average incumbent industry take rate is between 35-38%,” Kremerman said. “We’re a little over half that. As a result, teachers earn more and schools save money.”

Expansion

Zen Educate raised a $21 million Series A round in late 2022 as it sought to expand into the U.S. market after soft-launching in Minneapolis. Today, the company operates across four additional states (Texas, Colorado, California, and Arizona) on top of 11 regions in England. And more than 15% of its 300-strong workforce is now based in the U.S.

“From the Minneapolis soft-launch, we are now the second-largest provider in the state,” Kremerman said. “We are live across five states and we are working with nine of the top 200 largest school districts in the U.S.”

Kremerman also said that its technology-based approach has helped it adapt to the different regulatory environment in the U.S.

“Licensing is state-specific, whereas England and Wales have a standardised national standard,” Kremerman said. “We’re able to use our credentialing technology to adapt and roll out quickly between states, whereas most traditional staffing firms struggle with this.”

With another $37 million in the bank, the company said it’s planning to expand into more markets across the U.S. and U.K., and to launch new software for school administrators, including additions to its school workforce management software, which packs tools for credentialing, compliance, and absence management.

Furthermore, Zen Educate is also bolstering its resources through acquisitions, announcing its second-ever acquisition today with the purchase of teacher staffing agency Aquinas Education. The company said that it intends to complete several more acquisitions both in the U.S. and U.K.

Notably, Aquinas Education counts former professional soccer player turned TV presenter Jermaine Jenas as one of its owners, and following this acquisition Jenas now joins Zen Educate as brand ambassador.

Zen Educate’s Series B round was led by Round2 Capital, with participation from Adjuvo, Brighteye Ventures, FJ Labs, Ascension Ventures, and several angels.

Sona, a frontline workforce management platform, raises $27.5M with eyes on US expansion

Sona, a workforce management platform for frontline employees, has raised $27.5 million in a Series A round of funding.

More than two-thirds of the U.S. workforce are reportedly in frontline jobs, which might be anything from customer service and healthcare to retail environments and hospitality. But managing this vast workforce, ensuring roles are filled and service is delivered, is resource intensive. That is where Sona has been setting out to help since its foundation three years ago.

“Sona intelligently deploys our customers’ largest cost base — frontline labour,” Sona’s co-founder, Steffen Wulff Petersen, told TechCrunch. “This not only optimises their cost base, it also directly drives more revenue — you can’t sell food or deliver care without staff being scheduled correctly.”

Founded in London in 2021, Sona helps companies manage just about every facet of their frontline workforce, from shift scheduling, timesheets, and soliciting feedback to absence management and connecting with agencies to ensure shifts are covered during staff shortages.

Managers typically access Sona via a web portal, while workers access the platform via a mobile app with which they can complete timesheets, view available shifts and communicate with managers. Companies integrate Sona with their internal systems to ensure all the data flows through and between the various departments and stakeholders.

As one might expect in this day and age, Sona says it uses AI to automate many of the processes involved in managing a workforce, including optimizing rosters using data gleaned from workers’ contracts, such as their terms of employment, working preferences and availability. So, less time-consuming manual admin is the name of the game.

“Running a business with a large frontline workforce is primarily about ensuring the right people are in the right place at the right time,” Sona’s co-founder and CTO, Ben Dixon, told TechCrunch. “Sona becomes the central jumping off point for a large proportion of our customers’ operations, which means we integrate with nearly all of their other systems — from care management and point-of-sale, to single-sign-on and ERP (enterprise resource planning). It’s this deep level of integration that facilitates our AI product, because we’re the one system that can provide a unified, real-time view of data across the whole business.”

Besides legacy players such as PeoplePlanner in social care and Selima in hospitality, there is no shortage of well-funded startups targeting a similar space to Sona: there is Connecteam and Homebase for starters, the latter of which announced a $60 million fundraise just last month.

Petersen says that it’s setting out to differentiate from at least some of these companies by focusing on larger enterprises, meshing “consumer-grade design” with features required by more complex multi-site operations.

“Most newer, VC-backed players in the workforce management space are built for SMBs, with an easy and simple self-signup product,” Petersen told TechCrunch. “That’s a great approach for small businesses with 1-10 sites, and there’s millions of those businesses to target. We rarely cross paths with the SMB vendors because enterprise customers need the opposite product — one that handles deep complexity.”

Indeed, Sona’s pitch isn’t that it’s quick to deploy: Petersen states that the demo alone takes three hours, and implementation takes more like several months. “Think Salesforce versus Pipedrive,” Petersen said. “We pass leads on to some of the SMB vendors when customers don’t meet our enterprise criteria.”

Expansion

Sona is currently live across the social care and hospitality industries in the U.K., where it counts the likes of Gleneagles and Estelle Manor as customers. With another $27.5 million in the bank, the company is now gearing up to expand further afield, and a clue to its target markets lies in its new lead investor.

The Series A round was led by Menlo Park-based VC firm Felicis, which has previously exited investments like Ring to Amazon, Fitbit to Google, and publicly-traded Shopify. Other notable backers include Google’s Gradient Ventures, which led Sona’s seed round two years ago. Antler, SpeedInvest, Northzone and Bag Ventures also participated in the latest round.

Sona has now raised north of $40 million since its inception, and the company said it will use its fresh cash injection to “build more advanced AI capabilities” and accelerate its international plans, which will include its first U.S. foray.

“The U.S. will be an important market for Sona. We now have both Felicis and Gradient onboard, have hired our first two US based employees, and have signed our first six-figure Alpha customer,” Petersen said.

Fairgen ‘boosts’ survey results using synthetic data and AI-generated responses

Surveys have been used to gain insights on populations, products and public opinion since time immemorial. And while methodologies might have changed through the millennia, one thing has remained constant: The need for people, lots of people.

But what if you can’t find enough people to build a big enough sample group to generate meaningful results? Or, what if you could potentially find enough people, but budget constraints limit the number of people you can source and interview?

This is where Fairgen wants to help. The Israeli startup today launches a platform that uses “statistical AI” to generate synthetic data that it says is as good as the real thing. The company is also announcing a fresh $5.5 million fundraise from Maverick Ventures Israel, The Creator Fund, Tal Ventures, Ignia, and a handful of angel investors, taking its total cash raised since inception to $8 million.

‘Fake data’

Data might be the lifeblood of AI, but it has also been the cornerstone of market research since forever. So when the two worlds collide, as they do in Fairgen’s world, the need for quality data becomes a little bit more pronounced.

Founded in Tel Aviv, Israel, in 2021, Fairgen was previously focused on tackling bias in AI. But in late 2022, the company pivoted to a new product, Fairboost, which it is now launching out of beta.

Fairboost promises to “boost” a smaller dataset by up to three times, enabling more granular insights into niches that may otherwise be too difficult or expensive to reach. Using this, companies can train a deep machine learning model for each dataset they upload to the Fairgen platform, with statistical AI learning patterns across the different survey segments.

The concept of “synthetic data” (data created artificially rather than from real-world events) isn’t novel. Its roots go back to the early days of computing, when it was used to test software and algorithms, and simulate processes. But synthetic data, as we understand it today, has taken on a life of its own, particularly with the advent of machine learning, where it is increasingly used to train models. Artificially generated data that contains no sensitive information can address both data scarcity issues and data privacy concerns.

Fairgen is the latest startup to put synthetic data to the test, and it has market research as its primary target. It’s worth noting that Fairgen doesn’t produce data out of thin air, or throw millions of historical surveys into an AI-powered melting pot — market researchers need to run a survey for a small sample of their target market, and from that, Fairgen establishes patterns to expand the sample. The company says it can guarantee at least a two-fold boost on the original sample, but on average, it can achieve a three-fold boost.

In this way, Fairgen might be able to establish that someone of a particular age bracket and/or income level is more inclined to answer a question in a certain way. Or it might combine any number of data points to extrapolate from the original data set. It’s basically about generating what Fairgen co-founder and CEO Samuel Cohen says are “stronger, more robust segments of data, with a lower margin of error.”

“The main realisation was that people are becoming increasingly diverse — brands need to adapt to that, and they need to understand their customer segments,” Cohen explained to TechCrunch. “Segments are very different — Gen Zs think differently from older people. And in order to be able to have this market understanding at the segment level, it costs a lot of money, takes a lot of time and operational resources. And that’s where I realized the pain point was. We knew that synthetic data had a role to play there.”

An obvious criticism, and one the company concedes it has contended with, is that this all sounds like a massive shortcut around having to go out into the field, interview real people and collect real opinions.

Surely any under-represented group should be concerned that their real voices are being replaced by, well, fake voices?

“Every single customer we talked to in the research space has huge blind spots — totally hard-to-reach audiences,” Fairgen’s head of growth, Fernando Zatz, told TechCrunch. “They actually don’t sell projects because there are not enough people available, especially in an increasingly diverse world where you have a lot of market segmentation. Sometimes they cannot go into specific countries; they cannot go into specific demographics, so they actually lose on projects because they cannot reach their quotas. They have a minimum number [of respondents], and if they don’t reach that number, they don’t sell the insights.”

Fairgen isn’t the only company applying generative AI to the field of market research. Qualtrics last year said it was investing $500 million over four years to bring generative AI to its platform, though with a substantive focus on qualitative research. However, it is further evidence that synthetic data is here, and here to stay.

But validating results will play an important part in convincing people that this is the real deal and not some cost-cutting measure that will produce suboptimal results. Fairgen does this by comparing a “real” sample boost with a “synthetic” sample boost — it takes a small sample of the data set, extrapolates it, and puts it side-by-side with the real thing.

“With every single customer we sign up, we do this exact same kind of test,” Cohen said.

Statistically speaking

Cohen has an MSc in statistical science from the University of Oxford, and a PhD in machine learning from London’s UCL, part of which involved a nine-month stint as research scientist at Meta.

One of the company’s co-founders is chairman Benny Schnaider, who was previously in the enterprise software space, with four exits to his name: Ravello to Oracle for a reported $500 million in 2016; Qumranet to Red Hat for $107 million in 2008; P-Cube to Cisco for $200 million in 2004; and Pentacom to Cisco for $118 million in 2000.

And then there’s Emmanuel Candès, professor of statistics and electrical engineering at Stanford University, who serves as Fairgen’s lead scientific advisor.

This business and mathematical backbone is a major selling point for a company trying to convince the world that fake data can be every bit as good as real data, if applied correctly. It is also how the company is able to clearly explain the thresholds and limitations of its technology, such as how big the samples need to be to achieve the optimum boosts.

According to Cohen, they ideally need at least 300 real respondents for a survey, and from that Fairboost can boost a segment size constituting no more than 15% of the broader survey.

“Below 15%, we can guarantee an average 3x boost after validating it with hundreds of parallel tests,” Cohen said. “Statistically, the gains are less dramatic above 15%. The data already presents good confidence levels, and our synthetic respondents can only potentially match them or bring a marginal uplift. Business-wise, there is also no pain point above 15% — brands can already take learnings from these groups; they are only stuck at the niche level.”
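The thresholds Cohen describes, and the link between segment size and margin of error, can be sketched roughly as follows. This is a minimal illustration, not Fairgen’s method: the function names, the 95% z-value and the example survey (a 30-person segment in a 300-person survey) are assumptions, and the sketch optimistically treats each synthetic respondent as being as informative as a real one.

```python
import math

MIN_RESPONDENTS = 300      # minimum survey size cited by Cohen
MAX_SEGMENT_SHARE = 0.15   # largest segment share cited for boosting


def is_boostable(total_respondents: int, segment_size: int) -> bool:
    """Check the two thresholds quoted in the article (illustrative only)."""
    if total_respondents < MIN_RESPONDENTS:
        return False
    return segment_size / total_respondents <= MAX_SEGMENT_SHARE


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Textbook margin of error for a proportion at roughly 95% confidence."""
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    total, segment = 300, 30                 # hypothetical survey, 10% niche segment
    print(is_boostable(total, segment))
    before = margin_of_error(segment)
    after = margin_of_error(segment * 3)     # assumes a full 3x effective boost
    print(f"margin of error: {before:.3f} -> {after:.3f}")
```

Under those assumptions, tripling a segment shrinks its margin of error by a factor of √3, or roughly 1.7.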

The no-LLM factor

It’s worth noting that Fairgen doesn’t use large language models (LLMs), and its platform doesn’t generate “plain English” responses à la ChatGPT. The reason for this is that an LLM will use learnings from myriad other data sources outside the parameters of the study, which increases the chances of introducing bias that is incompatible with quantitative research.

Fairgen is all about statistical models and tabular data, and its training relies solely on the data contained within the uploaded dataset. That effectively allows market researchers to generate new and synthetic respondents by extrapolating from adjacent segments in the survey.

“We don’t use any LLMs for a very simple reason, which is that if we were to pre-train on a lot of [other] surveys, it would just convey misinformation,” Cohen said. “Because you’d have cases where it’s learned something in another survey, and we don’t want that. It’s all about reliability.”

In terms of business model, Fairgen is sold as a SaaS, with companies uploading their surveys in a structured format (.CSV or .SAV) to Fairgen’s cloud-based platform. According to Cohen, it takes up to 20 minutes to train the model on the survey data it’s given, depending on the number of questions. The user then selects a “segment” (a subset of respondents that share certain characteristics), such as “Gen Z working in industry x,” and then Fairgen delivers a new file structured identically to the original training file, with the exact same questions, just new rows.
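The shape of that workflow (survey file in, identically structured file with extra rows out) can be sketched as follows. This is only an illustration of the file handling: `boost_segment` and its parameters are hypothetical, and the “generator” here simply resamples real rows with replacement, which adds no new information. Fairgen says it trains a statistical model on the uploaded data instead.

```python
import csv
import io
import random


def boost_segment(csv_text, column, value, factor, seed=0):
    """Return CSV text with the original header and `factor` times the segment's rows.

    Placeholder generator: extra rows are resampled with replacement from the
    real segment. A real system would fit a statistical model instead.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    fieldnames = reader.fieldnames
    segment = [row for row in reader if row[column] == value]
    if not segment:
        raise ValueError("segment is empty")

    rng = random.Random(seed)
    extra = len(segment) * (factor - 1)
    synthetic = [dict(rng.choice(segment)) for _ in range(extra)]

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(segment + synthetic)   # real rows first, then placeholders
    return out.getvalue()


if __name__ == "__main__":
    sample = "id,generation,q1\n1,Gen Z,yes\n2,Boomer,no\n3,Gen Z,no\n"
    print(boost_segment(sample, "generation", "Gen Z", factor=3))
```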

Fairgen is being used by BVA and French polling and market research firm IFOP, which have already integrated the startup’s tech into their services. IFOP, which is a little like Gallup in the U.S., is using Fairgen for polling purposes in the European elections, though Cohen thinks it might end up getting used for the U.S. elections later this year, too.

“IFOP are basically our stamp of approval, because they have been around for like 100 years,” Cohen said. “They validated the technology and were our original design partner. We’re also testing or already integrating with some of the largest market research companies in the world, which I’m not allowed to talk about yet.”

UK challenger bank Monzo nabs another $190M as US expansion beckons

Monzo has raised another £150 million ($190 million), as the challenger bank looks to expand its presence internationally — particularly in the U.S.

The new round comes just two months after Monzo raised £340 million ($425 million), meaning the London-based company has now raised north of $610 million in 2024, and $1.5 billion since its inception nine years ago.

The first tranche of the Series I round saw Alphabet’s CapitalG and Google’s GV make a rare co-investment, alongside notable backers including HongShan Capital (formerly Sequoia Capital China), Passion Capital and Tencent. This extension saw existing investors such as CapitalG throwing more cash into the pot, alongside new backer Hedosophia, which had previously backed Monzo rival, Wise.

In March, Monzo said its pre-money valuation was £3.6 billion ($4.6 billion), translating to a post-money valuation of £4 billion ($5 billion). Now, the company says its post-money figure is £4.1 billion ($5.2 billion), meaning not much has changed in the past two months.
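The sterling figures line up with the usual relationship between pre-money and post-money valuations. A quick check, assuming (this is not stated by Monzo) that the extension was priced at the same pre-money valuation as the first tranche:

```python
def post_money(pre_money: float, *raises: float) -> float:
    """Post-money valuation = pre-money valuation + new capital raised."""
    return pre_money + sum(raises)


if __name__ == "__main__":
    pre = 3.6             # £ billions, Monzo's stated pre-money valuation
    first_tranche = 0.34  # £340 million raised in March
    extension = 0.15      # £150 million raised in this round
    print(round(post_money(pre, first_tranche), 2))             # about £4 billion
    print(round(post_money(pre, first_tranche, extension), 2))  # about £4.1 billion
```

In other words, the higher post-money figure is accounted for by the new cash alone, which is why the underlying valuation has barely moved.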

While Monzo is mostly known in its domestic U.K. market, it has been trying to crack the U.S. for some years. But without a banking license of its own, it has been operating as a mobile banking app in partnership with Ohio’s Sutton Bank since early 2022. The company appointed a new CEO for its U.S. operations back in October, hiring head of global product for Block’s Cash App, Conor Walsh, to lead Stateside.

In the U.K., Monzo now claims more than 9 million retail customers and 400,000 clients in the business realm. Its revenues doubled in the most recent financial year, and with a veritable war-chest at its disposal, the company is well-financed to try and emulate some of this success across the pond.

“The huge interest we see from global investors is testament to the momentum and strength of our business model and the commitment of our teams, who put our customers at the heart of everything we do,” Monzo CEO, TS Anil, said in a statement issued to TechCrunch. “With even more rocketfuel for our ambitions and exciting products in the pipeline, there’s no doubt in my mind that the best of Monzo is yet to come.”

EQT snaps up API and identity management software company WSO2 for more than $600M

WSO2, a company that provides API management and identity and access management (IAM) services for enterprises, has been acquired by Swedish investment giant EQT.

Terms of the deal were not disclosed, but TechCrunch has learned via sources that the deal values WSO2 at “more than” $600 million, with EQT attaining a “significant majority” stake for the price.

WSO2’s products include an open-source API manager, comparable to something like Google’s Apigee, which businesses use for building and integrating all their digital services, either in the cloud or on-premises. The company offers tangential services such as API management specifically for Kubernetes, as well as its flagship Identity Server — a little something like Okta — that companies use for managing identity and access functionality in their apps, such as single sign-on (SSO).

WSO2, which was founded out of Sri Lanka in 2005, had raised around $130 million in funding from the likes of Intel, Cisco and Goldman Sachs, with its most recent tranche coming via a $93 million Series E round in 2022. An official valuation was never announced, but articles from some outlets at the time reported a valuation of more than $600 million. So that would mean WSO2 has remained somewhat stagnant, though the “more than” facet here could disguise some movement in the company’s valuation.

A strong track record

WSO2 co-founder and CEO Sanjiva Weerawarana has a strong track record in the open-source sphere, particularly among Apache Software Foundation projects, and he was one of the main designers of the cloud-native Ballerina programming language. Since 2017, Weerawarana has also driven for Uber, a move he says is designed to “challenge the norm” and make driving for a living more socially acceptable in his native Sri Lanka.

WSO2 is a fairly well-distributed company, in keeping with the ethos of other businesses founded around open source. While the company counts a U.S. HQ in Santa Clara, and many of its senior leadership team are spread across the U.S., its center of gravity lies in Sri Lanka where much of its workforce is based — including Weerawarana, who’s based in the capital Colombo.

With that in mind, it’s worth noting that the acquisition was actually made by an EQT subsidiary called EQT Private Capital Asia, formerly known as Baring Private Equity Asia, which EQT procured in 2022 for €6.8 billion to serve as its private equity vehicle for Asia.

WSO2 has a global spread of customers that includes AT&T, Honda and Axa, something that EQT Private Capital Asia partner Hari Gopalakrishnan says was a key part of its decision to invest. Moreover, with cloud computing and AI driving demand for security infrastructure, WSO2 was a particularly appealing proposition for an investment firm with recent form in the enterprise software space.

“Software is a key focus sector for EQT, and WSO2 is a strong company that has scaled globally with an enterprise customer base spread across the US and Europe,” Gopalakrishnan said in a statement. “[We] believe that the company is well-positioned to capitalize on long-term trends such as digital transformation and rising GenAI adoption.”

EQT says that it expects the acquisition to close in the second half of 2024.

Parloa, a conversational AI platform for customer service, raises $66M

Conversational AI platform Parloa has nabbed $66 million in a Series B round of funding, a year after the German startup raised $21 million from a swathe of European investors to propel its international growth.

The company is focusing on the U.S. market in particular, where Parloa opened a New York office last year — it says this hub helped it sign up “several Fortune 200 companies” in the region. For its latest instalment, Parloa has secured Altimeter Capital as lead backer, a U.S.-based VC firm notable for its previous investments in the likes of Uber, Airbnb, Snowflake, Twilio, and HubSpot.

AI and automation in customer service is nothing new, but with a new wave of large language models (LLMs) and generative AI infrastructure, truly smart “conversational” AI (i.e. not dumb chatbots) is again firmly in investors’ focus. Established players continue to raise substantial sums, such as Kore.ai which closed a chunky $150 million round of funding a few months ago from big-name backers such as Nvidia. Elsewhere, entrepreneur and former Salesforce CEO Bret Taylor launched a new customer experience platform called Sierra, built around the concept of “AI agents,” with north of $100 million in VC backing.

Parloa is well-positioned to capitalize on the “AI with everything” hype that has hit fever pitch these past couple of years, as companies seek new ways to improve efficiency through automation.

Founded out of Germany in 2018, Parloa has already secured high-profile customers such as European insurance giant Swiss Life and sporting goods retailer Decathlon, which use the Parloa platform to automate customer communications including emails and instant messaging.

However, “voice” is where co-founder and CEO Malte Kosub reckons Parloa stands out.

“Our strategy has always been centered around ‘voice first,’ the most critical and impactful facet of the customer experience,” Kosub told TechCrunch over email. “As a result, Parloa’s AI-based voice conversations sound more human than any other solution.”

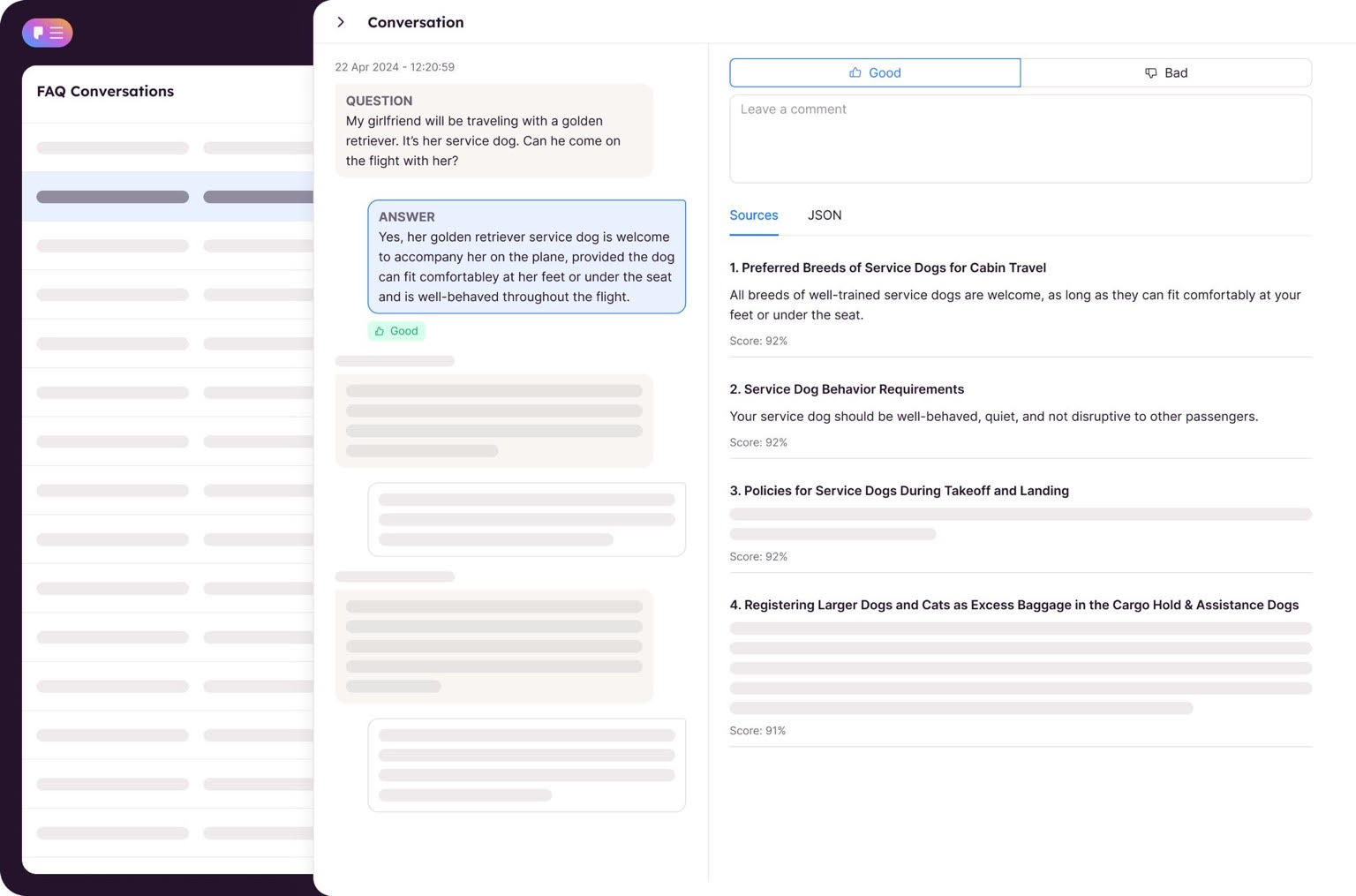

Parloa platform. Image Credits: Parloa

Co-founder and CTO Stefan Ostwald says that AI has been a core part of Parloa’s DNA since its inception six years ago, using a mix of proprietary and open source LLMs to train models for speech-to-text use-cases.

“We’ve trained a variety of speech-to-text models on phone audio quality and customer service use cases, developed a custom telephony infrastructure to minimize latency — a key challenge in voice automation — and a proprietary LLM agent framework for customer service,” he said.

Prior to now, Parloa had raised around $25 million, the bulk of which arrived via its Series A round last year. And with another $66 million in the bank, it’s well-financed to double down on both its European and U.S. growth, with Kosub noting that it has tripled its revenue in each of the past three years.

“We successfully entered the U.S. market in 2023 — we’ve always had confidence in the excellence and competitiveness of our product, however the overwhelming and rapid success it achieved in the U.S. surpassed everyone’s expectations,” Kosub said.

Aside from lead investor Altimeter, Parloa’s Series B round included cash injections from EQT Ventures, Newion, Senovo, Mosaic Ventures and La Familia Growth. Today’s funding brings Parloa’s total capital raised to date to $98 million, following its $21 million Series A funding round led by EQT Ventures in 2023.

French startup FlexAI exits stealth with $30M to ease access to AI compute

A French startup has raised a hefty seed investment to “rearchitect compute infrastructure” for developers wanting to build and train AI applications more efficiently.

FlexAI, as the company is called, has been operating in stealth since October 2023, but the Paris-based company is formally launching Wednesday with €28.5 million ($30 million) in funding, while teasing its first product: an on-demand cloud service for AI training.

This is a chunky bit of change for a seed round, which normally signals substantial founder pedigree, and that is the case here. FlexAI co-founder and CEO Brijesh Tripathi was previously a senior design engineer at GPU giant and now AI darling Nvidia, before landing in various senior engineering and architecting roles at Apple; Tesla (working directly under Elon Musk); Zoox (before Amazon acquired the autonomous driving startup); and, most recently, Intel, where he was VP of its AI and super compute platform offshoot, AXG.

FlexAI co-founder and CTO Dali Kilani has an impressive CV, too, serving in various technical roles at companies including Nvidia and Zynga, while most recently filling the CTO role at French startup Lifen, which develops digital infrastructure for the healthcare industry.

The seed round was led by Alpha Intelligence Capital (AIC), Elaia Partners and Heartcore Capital, with participation from Frst Capital, Motier Ventures, Partech and InstaDeep CEO Karim Beguir.

FlexAI team in Paris

The compute conundrum

To grasp what Tripathi and Kilani are attempting with FlexAI, it’s first worth understanding what developers and AI practitioners are up against in terms of accessing “compute”; this refers to the processing power, infrastructure and resources needed to carry out computational tasks such as processing data, running algorithms, and executing machine learning models.

“Using any infrastructure in the AI space is complex; it’s not for the faint-of-heart, and it’s not for the inexperienced,” Tripathi told TechCrunch. “It requires you to know too much about how to build infrastructure before you can use it.”

By contrast, the public cloud ecosystem that has evolved these past couple of decades serves as a fine example of how an industry has emerged from developers’ need to build applications without worrying too much about the back end.

“If you are a small developer and want to write an application, you don’t need to know where it’s being run, or what the back end is; you just need to spin up an EC2 (Amazon Elastic Compute Cloud) instance and you’re done,” Tripathi said. “You can’t do that with AI compute today.”

In the AI sphere, developers must figure out how many GPUs (graphics processing units) they need to interconnect over what type of network, managed through a software ecosystem that they are entirely responsible for setting up. If a GPU or network fails, or if anything in that chain goes awry, the onus is on the developer to sort it.

“We want to bring AI compute infrastructure to the same level of simplicity that the general purpose cloud has gotten to — after 20 years, yes, but there is no reason why AI compute can’t see the same benefits,” Tripathi said. “We want to get to a point where running AI workloads doesn’t require you to become data centre experts.”

With the current iteration of its product going through its paces with a handful of beta customers, FlexAI will launch its first commercial product later this year. It’s basically a cloud service that connects developers to “virtual heterogeneous compute,” meaning that they can run their workloads and deploy AI models across multiple architectures, paying on a usage basis rather than renting GPUs on a dollars-per-hour basis.

GPUs are vital cogs in AI development, serving to train and run large language models (LLMs), for example. Nvidia is one of the preeminent players in the GPU space, and one of the main beneficiaries of the AI revolution sparked by OpenAI and ChatGPT. In the 12 months since OpenAI launched an API for ChatGPT in March 2023, allowing developers to bake ChatGPT functionality into their own apps, Nvidia’s market capitalization ballooned from around $500 billion to more than $2 trillion.

LLMs are pouring out of the technology industry, with demand for GPUs skyrocketing in tandem. But GPUs are expensive to run, and renting them from a cloud provider for smaller jobs or ad-hoc use-cases doesn’t always make sense and can be prohibitively expensive; this is why AWS has been dabbling with time-limited rentals for smaller AI projects. But renting is still renting, which is why FlexAI wants to abstract away the underlying complexities and let customers access AI compute on an as-needed basis.

“Multicloud for AI”

FlexAI’s starting point is that most developers don’t really care whose GPUs or chips they use, whether it’s Nvidia, AMD, Intel, Graphcore or Cerebras. Their main concern is being able to develop their AI and build applications within their budgetary constraints.

This is where FlexAI’s concept of “universal AI compute” comes in: FlexAI takes the user’s requirements and allocates the job to whatever architecture makes sense for it, taking care of all the necessary conversions across the different platforms, whether that’s Intel’s Gaudi infrastructure, AMD’s ROCm or Nvidia’s CUDA.

“What this means is that the developer is only focused on building, training and using models,” Tripathi said. “We take care of everything underneath. The failures, recovery, reliability, are all managed by us, and you pay for what you use.”

In many ways, FlexAI is setting out to fast-track for AI what has already been happening in the cloud, meaning more than replicating the pay-per-usage model: It means the ability to go “multicloud” by leaning on the different benefits of different GPU and chip infrastructures.

For example, FlexAI will channel a customer’s specific workload depending on what their priorities are. If a company has a limited budget for training and fine-tuning its AI models, it can set that within the FlexAI platform to get the maximum amount of compute bang for its buck. This might mean going through Intel for cheaper (but slower) compute, but if a developer has a small run that requires the fastest possible output, then it can be channeled through Nvidia instead.

Under the hood, FlexAI is basically an “aggregator of demand,” renting the hardware itself through traditional means and using its “strong connections” with the folks at Intel and AMD to secure preferential prices that it spreads across its own customer base. This doesn’t necessarily mean side-stepping the kingpin Nvidia, but to a large extent it possibly does: With Intel and AMD fighting for GPU scraps left in Nvidia’s wake, there is a huge incentive for them to play ball with aggregators such as FlexAI.

“If I can make it work for customers and bring tens to hundreds of customers onto their infrastructure, they [Intel and AMD] will be very happy,” Tripathi said.

This sits in contrast to similar GPU cloud players in the space such as the well-funded CoreWeave and Lambda Labs, which are focused squarely on Nvidia hardware.

“I want to get AI compute to the point where the current general purpose cloud computing is,” Tripathi noted. “You can’t do multicloud on AI. You have to select specific hardware, number of GPUs, infrastructure, connectivity, and then maintain it yourself. Today, that’s the only way to actually get AI compute.”

When asked who the exact launch partners are, Tripathi said that he was unable to name all of them due to a lack of “formal commitments” from some of them.

“Intel is a strong partner, they are definitely providing infrastructure, and AMD is a partner that’s providing infrastructure,” he said. “But there is a second layer of partnerships that are happening with Nvidia and a couple of other silicon companies that we are not yet ready to share, but they are all in the mix and MOUs [memorandums of understanding] are being signed right now.”

The Elon effect

Tripathi is more than equipped to deal with the challenges ahead, having worked at some of the world’s largest tech companies.

“I know enough about GPUs; I used to build GPUs,” Tripathi said of his seven-year stint at Nvidia, ending in 2007 when he jumped ship for Apple as it was launching the first iPhone. “At Apple, I became focused on solving real customer problems. I was there when Apple started building their first SoCs [system on chips] for phones.”

Tripathi also spent two years at Tesla from 2016 to 2018 as hardware engineering lead, where he ended up working directly under Elon Musk for his last six months after two people above him abruptly left the company.

“At Tesla, the thing that I learned and I’m taking into my startup is that there are no constraints other than science and physics,” he said. “How things are done today is not how it should be or needs to be done. You should go after what the right thing to do is from first principles, and to do that, remove every black box.”

Tripathi was involved in Tesla’s transition to making its own chips, a move that has since been emulated by GM and Hyundai, among other automakers.

“One of the first things I did at Tesla was to figure out how many microcontrollers there are in a car, and to do that, we literally had to sort through a bunch of those big black boxes with metal shielding and casing around it, to find these really tiny small microcontrollers in there,” Tripathi said. “And we ended up putting that on a table, laid it out and said, ‘Elon, there are 50 microcontrollers in a car. And we pay sometimes 1,000 times margins on them because they are shielded and protected in a big metal casing.’ And he’s like, ‘let’s go make our own.’ And we did that.”

GPUs as collateral

Looking further into the future, FlexAI has aspirations to build out its own infrastructure, too, including data centers. This, Tripathi said, will be funded by debt financing, building on a recent trend that has seen rivals in the space including CoreWeave and Lambda Labs use Nvidia chips as collateral to secure loans — rather than giving more equity away.

“Bankers now know how to use GPUs as collaterals,” Tripathi said. “Why give away equity? Until we become a real compute provider, our company’s value is not enough to get us the hundreds of millions of dollars needed to invest in building data centres. If we did only equity, we disappear when the money is gone. But if we actually bank it on GPUs as collateral, they can take the GPUs away and put it in some other data center.”

Reshape wants to help ‘decode nature’ by automating the ‘visual’ part of lab experiments

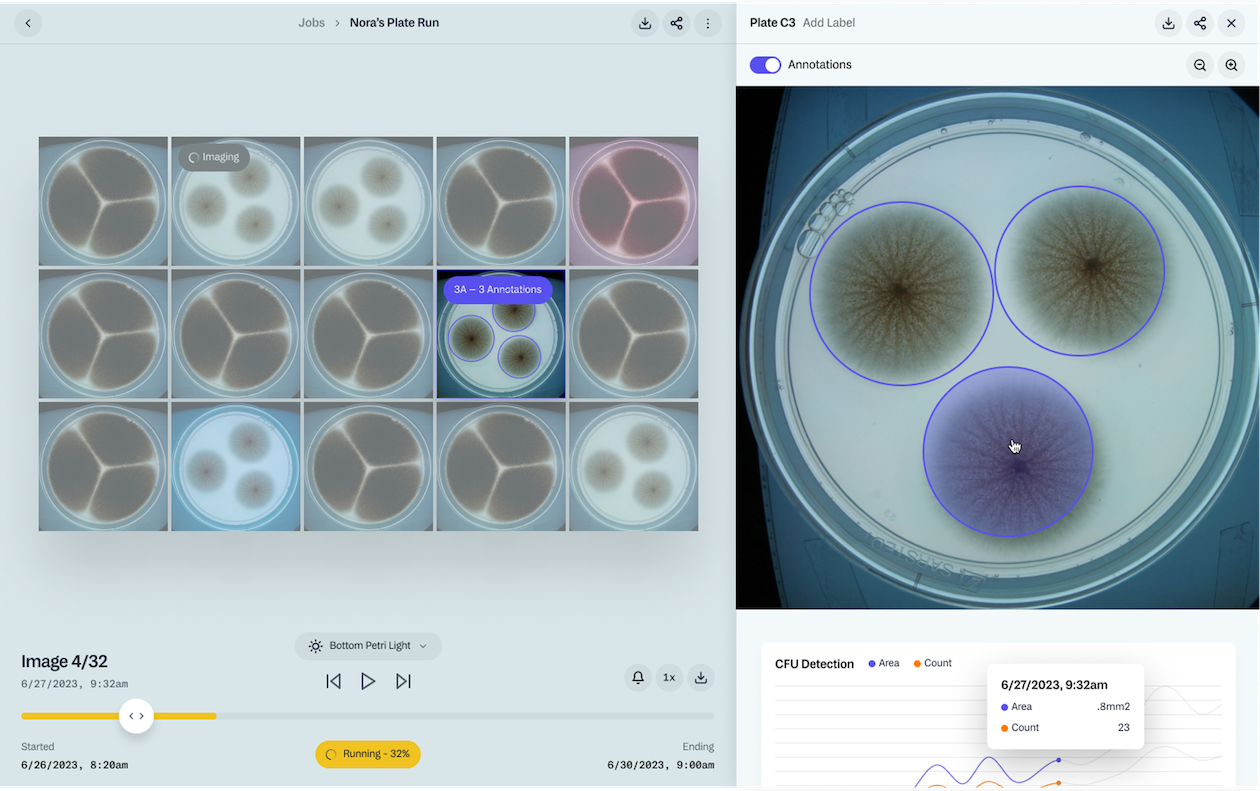

A Danish startup wants to help R&D teams automate lab experiments that require visual inspections, raising $20 million in a Series A round of funding to scale its technology in the U.S.

Reshape, which was founded out of Copenhagen in 2018, has developed a robotic imaging system replete with software and AI models to help scientists track visual changes — such as color or cell-growth rates — from Petri dishes and similar plate formats. Its machines sport built-in incubation that can be set to specific temperatures, with the corresponding data logged to ensure the experiments can be easily repeated.

The benefit is that these experiments can be run 24/7 without direct supervision, freeing up technicians for other critical tasks.

Reshape’s machine in action Image Credits: Reshape

“Decoding nature”

The concept of “decoding nature” sits at the heart of what Reshape is setting out to achieve, building on a broader trend that has seen the lines blur between the natural and manufactured worlds. These opportunities have not been lost on Silicon Valley, evidenced by the vast sums of money poured into technologies seeking to “engineer” biology.

“Biology as a whole is transitioning from a science to an engineering discipline, and I think one of the biggest things that we want to do is make some of the very ‘intangible’ — how does an object grow, how does it behave? — easier to describe,” Reshape CEO Carl-Emil Grøn told TechCrunch. “Ideally, we want to figure out how we make that translation layer between what happens in the real world, and what happens in your DNA.”

The genesis for Reshape came when Grøn, who has an engineering background himself, started dating someone who worked in the biotech industry, giving him insight into the amount of manual effort involved in lab experiments.

“I just assumed that biotech was massively automated, but every eighth hour, of every day, for five months straight, she had to go in to the lab and take a photo of a Petri dish,” Grøn said. “When you are from the tech world, it just seemed crazy.”

After speaking to a bunch of biotech companies in the Copenhagen locale, Grøn realized that his initial experience wasn’t some weird anomaly: The way that labs carry out DNA-sequencing, measure chemical compositions and all the rest was still happening in more or less the same way as it had been done for more than a century.

So Grøn enlisted two co-founders, Daniel Storgaard and Magnus Madsen, and set about building a full-stack platform, replete with high-resolution cameras and lighting, to capture visual data points and time-lapses and record how different components in a given experiment react to the conditions they are subjected to.

Under the hood

Reshape develops its own AI models, trained on in-house data at its own lab, and these can work from the get-go for some of the more common experiment types, such as those involving fungal or bacterial hosts, or seeds and insects. But the company can also help its customers train models for specific use-cases, such as tracking how particular microbes behave under certain conditions.

“The Reshape data science team, using our custom-built MLops architecture, handles this end-to-end, starting from understanding the desired output and quantification, annotating the required datasets at scale, developing and benchmarking models, and then deploying them in our product for our customers,” Grøn said.

An agriculture company, for example, can use Reshape to test for seed germination rates, or the severity of a specific disease. Or a food company can perform ingredient characterization to test for quality, freshness or how the ingredients ripen over time — anything that typically requires a visual assessment.

Growth detected in assay Image Credits: Reshape

Some Reshape customers are using the platform technology to transition from chemical to bio-pesticides — basically, figuring out which new compounds work the best and recording how they were made. And speed is ultimately the main appeal for customers.

“They’ll do like four to 10 times as many experiments as they could before, which just means that they get products to market much, much faster,” Grøn said.

Reshape makes the results available to view in a cloud-based interface, but the platform also supports data exports in formats such as LIMS or CSV, allowing users to take their data to other biotech software such as Benchling or even just Excel.

Results are presented through a cloud-based interface Image Credits: Reshape

In terms of accuracy, Grøn says that the company compares the underlying models to the performance of a human on that same experiment, covering metrics such as false negatives. This helps avoid scenarios where an experiment might otherwise have been cut short because the scientist thought it was ineffective.

“We help with about an 80% reduction in false negatives,” Grøn said. “We also help our customers reduce how much time they need to get a result. And instead of having to rely on remembering how you did an experiment a few years back, we keep perfect track of it. So every time you run an experiment on the platform, we track it; repeatability is extremely important.”

In terms of business model, Reshape sells the full platform as a subscription, which includes the hardware, machine learning and underlying software. Pricing follows a “value-based” model, which can vary for each customer.

For now, Reshape ships just one size of machine, meaning if a customer has lots of experiments, then they must obtain lots of machines. So to scale this to giant industrial-grade experiments, Reshape might need bigger machines; Grøn remained somewhat coy on this matter, but he suggested that they might “branch out” to bigger devices in the future.

Reshape’s imaging machine Image Credits: Reshape

Growth

A graduate of Y Combinator’s (YC) Winter 2021 batch, Reshape has amassed a fairly impressive roster of clients, including Swiss agricultural tech giant Syngenta and the University of Oxford. With another $20 million in the bank, which follows an $8.1 million seed round last year, Reshape says it plans to use its fresh cash injection to scale its business in the U.S., where it says around two-thirds of its revenue already emanates, albeit mostly from its European customers’ U.S. facilities.

“We have proven that our technology works — now it’s about scaling it and helping as many labs as possible to accelerate the biological transition,” Grøn said.

Others are also bringing automation to science labs, including London’s Automata, which raised $40 million last year to target the broader lab workflow. And some companies offer something similar to what Reshape is trying to do, such as Singer Instruments’ Phenobooth and Interscience’s ScanStation.

But Grøn reckons that providing a full-stack platform, complete with end-to-end data management that’s good to go off the bat, is what sets Reshape apart.

“This is an expensive problem that a lot of companies have been trying to solve for a long time,” Grøn said. “We provide the incubation, image capture, and analysis in a closed-loop system. Our pre-trained models are ready to go right out of the box and don’t require time-consuming training.”

Reshape’s Series A round was led by European VC firm Astanor Ventures, with participation from YC, R7, ACME, 21stBio and Unity co-founder Nicholas Francis.